Audit Logs for Confluent Cloud

Important

This feature is available as a preview feature. A preview feature is a component

of Confluent Cloud that is being introduced to gain early feedback from developers.

This feature can be used for evaluation and non-production testing purposes

or to provide feedback to Confluent.

Audit logs provide a way to capture, protect, and preserve Kafka authentication

and authorization activity into topics in Kafka clusters on Confluent Cloud. Specifically,

audit logs record the runtime decisions of the permission checks that occur as

users and service accounts connect to clusters and attempt to take actions that

are protected by ACLs.

Each auditable event includes information about who tried to do what, when they

tried, and whether or not the system gave permission to proceed.

The primary value of audit logs is that they provide data you can use to assess

security risks in your Confluent Cloud clusters. They contain all of the

information necessary to follow a user’s interaction with your Confluent Cloud clusters,

and provide a way to:

- Track user and application access

- Identify abnormal behavior and anomalies

- Proactively monitor and resolve security risks

Within Confluent Cloud, all audit log messages from your clusters are retained for seven

days on an independent cluster. Users cannot modify, delete, or produce messages

directly to the audit log topic, and to consume the messages, users must have an

API key specific to the audit log cluster.

Prerequisites

- Kafka client

- You can use any Kafka client (for example, Confluent CLI, C/C++, or Java) to

consume from the Confluent Cloud audit log topic as long as it supports SASL

authentication. Thus, any prerequisites are specific to the Kafka client you

use. For details about configuring Confluent Cloud clients, see Configure Confluent Cloud Clients.

- Consume configuration

- Confluent Cloud provides a configuration you can copy and paste into your Kafka client

of choice. This configuration allows you to connect to the audit log

cluster and consume from the audit log topic.

- Cluster type

- Only Confluent Cloud dedicated clusters support audit logs.

Auditable events

Confluent Cloud audit logs include two kinds of events: authentication events that are

sent when a client connects to a Kafka cluster, and authorization events that are

sent when the Kafka cluster checks to verify whether or not a client is allowed

to take an action.

Note

Users may attempt to authorize a task solely to find out if they

can perform the task, but not follow through with it. In these instances, the

authorization is still captured in the audit log.

Confluent Cloud audit logs capture the following events:

| Event name | Description |
|---|---|
| kafka.AlterConfigs | A Kafka configuration is being altered/updated. |
| kafka.Authentication | A client has connected to the Kafka cluster using an API key or token. |
| kafka.CreateAcls | A Kafka broker ACL is being created. |
| kafka.CreatePartitions | Partitions are being added to a topic. |
| kafka.CreateTopics | A topic is being created. |
| kafka.DeleteAcls | A Kafka broker ACL is being deleted. |
| kafka.DeleteGroups | A Kafka group is being deleted. |
| kafka.DeleteRecords | A Kafka record is being deleted. |
| kafka.DeleteTopics | A Kafka topic is being deleted. |
| kafka.IncrementalAlterConfigs | A dynamic configuration of a Kafka broker is being altered. |
| kafka.OffsetDelete | A committed offset for a partition in a consumer group is being deleted. |

All Confluent Cloud audit log messages are captured in the audit log topic,

confluent-audit-log-events.

The following example shows an authentication event that was sent when service

account 306343 used the API Key MAIDSRFG53RXYTKR to connect to the

Kafka cluster lkc-6k8r8q:

{
  "id": "29ca0e51-fdcd-44bd-a393-43193432b614",
  "source": "crn:///kafka=lkc-6k8r8q",
  "specversion": "1.0",
  "type": "io.confluent.kafka.server/authentication",
  "datacontenttype": "application/json",
  "subject": "crn:///kafka=lkc-6k8r8q",
  "time": "2020-12-28T22:41:43.395Z",
  "data": {
    "serviceName": "crn:///kafka=lkc-6k8r8q",
    "methodName": "kafka.Authentication",
    "resourceName": "crn:///kafka=lkc-6k8r8q",
    "authenticationInfo": {
      "principal": "User:306343",
      "metadata": {
        "mechanism": "SASL_SSL/PLAIN",
        "identifier": "MAIDSRFG53RXYTKR"
      }
    },
    "result": {
      "status": "SUCCESS",
      "message": ""
    }
  }
}
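As a minimal sketch of how a consumer might pull the interesting fields out of an authentication event like the one above, the following Python uses only the standard library. The function name `summarize_authentication` is hypothetical, and the field paths are assumed from the single example shown here rather than from a published schema, so optional fields are read defensively.

```python
import json

# Trimmed copy of the authentication event shown above.
SAMPLE_AUTHENTICATION = """
{
  "type": "io.confluent.kafka.server/authentication",
  "time": "2020-12-28T22:41:43.395Z",
  "data": {
    "methodName": "kafka.Authentication",
    "resourceName": "crn:///kafka=lkc-6k8r8q",
    "authenticationInfo": {
      "principal": "User:306343",
      "metadata": {
        "mechanism": "SASL_SSL/PLAIN",
        "identifier": "MAIDSRFG53RXYTKR"
      }
    },
    "result": {"status": "SUCCESS", "message": ""}
  }
}
"""


def summarize_authentication(raw: str) -> dict:
    """Return who connected, with which credential, and the outcome."""
    event = json.loads(raw)
    data = event["data"]
    auth = data["authenticationInfo"]
    # Assumption: "metadata" and "result" may be missing on some events,
    # so fall back to empty dicts instead of raising KeyError.
    metadata = auth.get("metadata", {})
    result = data.get("result", {})
    return {
        "time": event["time"],
        "cluster": data["resourceName"],
        "principal": auth["principal"],
        "api_key": metadata.get("identifier"),
        "mechanism": metadata.get("mechanism"),
        "status": result.get("status"),
    }
```

Calling `summarize_authentication(SAMPLE_AUTHENTICATION)` yields a flat dictionary that is easier to log or index than the nested event.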

Audit log content

The following example shows the content of an audit log message that was triggered

by an authorization event when user 269915 tried to create an ACL on Kafka

cluster lkc-6k8r8q, and was allowed to do so because as a super user, they

had the Alter Cluster permission:

{
  "id": "7908ccba-dfc0-42cc-825a-efb329b8c40c",
  "source": "crn:///kafka=lkc-6k8r8q",
  "specversion": "1.0",
  "type": "io.confluent.kafka.server/authorization",
  "datacontenttype": "application/json",
  "subject": "crn:///kafka=lkc-6k8r8q",
  "time": "2020-12-28T22:39:13.680Z",
  "data": {
    "serviceName": "crn:///kafka=lkc-6k8r8q",
    "methodName": "kafka.CreateAcls",
    "resourceName": "crn:///kafka=lkc-6k8r8q",
    "authenticationInfo": {
      "principal": "User:269915"
    },
    "authorizationInfo": {
      "granted": true,
      "operation": "Alter",
      "resourceType": "Cluster",
      "resourceName": "kafka-cluster",
      "patternType": "LITERAL",
      "superUserAuthorization": true
    }
  }
}
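To illustrate how the "who tried to do what, and was it allowed" information can be read from an authorization event, here is a small Python sketch using only the standard library. The helper names `describe_authorization` and `count_denials` are hypothetical, the field paths are assumed from the example above, and the denied event is synthetic (a copy of the example with `granted` set to false), not real log output.

```python
import copy
from collections import Counter

# Trimmed copy of the authorization event shown above.
SAMPLE_EVENT = {
    "type": "io.confluent.kafka.server/authorization",
    "time": "2020-12-28T22:39:13.680Z",
    "data": {
        "methodName": "kafka.CreateAcls",
        "authenticationInfo": {"principal": "User:269915"},
        "authorizationInfo": {
            "granted": True,
            "operation": "Alter",
            "resourceType": "Cluster",
            "resourceName": "kafka-cluster",
            "patternType": "LITERAL",
            "superUserAuthorization": True,
        },
    },
}

# Synthetic denied event, for illustration only.
SYNTHETIC_DENIED = copy.deepcopy(SAMPLE_EVENT)
SYNTHETIC_DENIED["data"]["authorizationInfo"]["granted"] = False


def describe_authorization(event: dict) -> str:
    """Render one authorization decision as a single readable line."""
    data = event["data"]
    info = data["authorizationInfo"]
    outcome = "allowed" if info["granted"] else "denied"
    principal = data["authenticationInfo"]["principal"]
    return (
        f'{principal} was {outcome} {info["operation"]} on '
        f'{info["resourceType"]} {info["resourceName"]} ({data["methodName"]})'
    )


def count_denials(events) -> Counter:
    """Count denied authorization checks per principal."""
    denials = Counter()
    for event in events:
        data = event.get("data", {})
        info = data.get("authorizationInfo")
        # Events without authorizationInfo (for example, authentication
        # events) are ignored.
        if info is not None and not info.get("granted", False):
            denials[data["authenticationInfo"]["principal"]] += 1
    return denials
```

A per-principal denial count is one simple signal for spotting abnormal behavior; what threshold counts as suspicious depends on your environment.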

Configuring Confluent Cloud audit logging

Because audit logging is enabled by default (for dedicated clusters only), the

only configuration task required is to consume from the audit log topic.

Note

The audit log topic is created when the first auditable event occurs.

Consume with CLI

Log in to your Confluent Cloud organization using the Confluent Cloud CLI.

Run audit-log describe to identify which resources to use.

ccloud audit-log describe

+-----------------+----------------------------+
| Cluster         | lkc-yokxv6                 |
| Environment     | env-x11xz                  |
| Service Account | 292163                     |
| Topic Name      | confluent-audit-log-events |
+-----------------+----------------------------+

Specify the environment and cluster to use (using the data that you

retrieved in the previous step).

ccloud environment use env-x11xz

ccloud kafka cluster use lkc-yokxv6

If you have an existing API key and secret for audit logs, you can use them

as shown here:

ccloud api-key store <API_KEY> <SECRET> --resource lkc-yokxv6

Important

There is a limit of 2 API keys per audit log cluster. For details about

creating, editing, and deleting API keys, refer to API Keys.

Otherwise, create a new API key. Save the API key and secret.

ccloud api-key create --service-account 292163 --resource lkc-yokxv6

ccloud api-key use <API_KEY> --resource lkc-yokxv6

Note

Be sure to save the API key and secret. The secret is not retrievable later.

Consume audit log events from the audit log topic.

ccloud kafka topic consume -b confluent-audit-log-events

Refer to ccloud kafka topic consume for details about the flags you can use with this command.
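Once events are flowing, you may want to keep only certain event types. The following Python sketch filters a stream of text lines by event name. It assumes each consumed message arrives as one JSON object per line, which is an assumption about the output format and not something this page guarantees; lines that are not JSON objects are skipped. The function name `filter_events` is hypothetical.

```python
import json
from typing import Iterable, Iterator, Set


def filter_events(lines: Iterable[str], method_names: Set[str]) -> Iterator[dict]:
    """Yield parsed events whose data.methodName is in method_names."""
    for line in lines:
        line = line.strip()
        if not line:
            continue
        try:
            event = json.loads(line)
        except json.JSONDecodeError:
            # Not JSON (for example, a status message): skip it.
            continue
        if not isinstance(event, dict):
            continue
        data = event.get("data")
        if isinstance(data, dict) and data.get("methodName") in method_names:
            yield event
```

For example, passing `sys.stdin` as `lines` and `{"kafka.CreateAcls", "kafka.DeleteAcls"}` as `method_names` would keep only ACL changes from whatever is piped into the script.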

To view the API keys that exist for the audit log cluster:

ccloud api-key list --resource lkc-yokxv6

To delete an API key:

ccloud api-key delete NFHU56EJVPMXC6HC

New connections using a deleted API key are not allowed. You can rotate

keys by creating a new key, configuring your clients to use the new key, and

then deleting the old one.

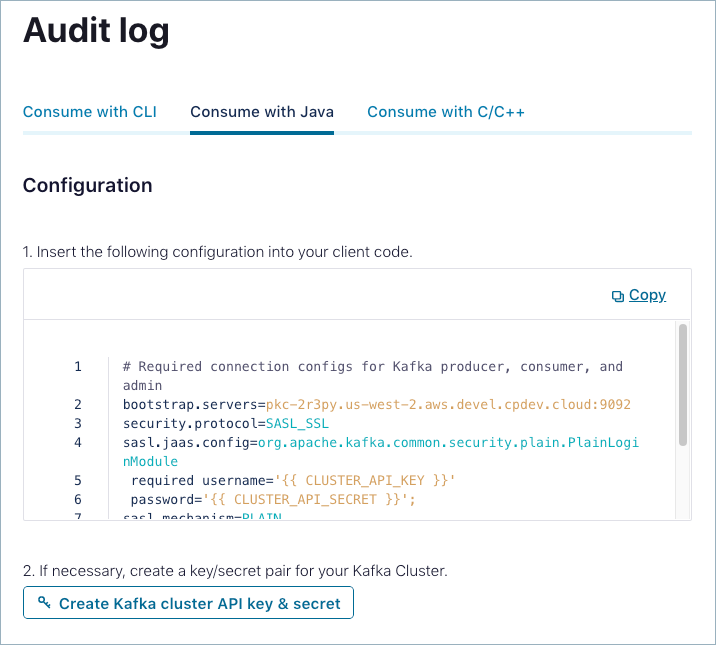

Consume with Java

Sign in to Confluent Cloud at https://confluent.cloud.

Navigate to ADMINISTRATION -> Audit log.

On the Audit log page, click the Consume with Java tab.

Copy and paste the provided configuration into your client.

If necessary, click Create Kafka cluster API key & secret to create a

key/secret pair for your Kafka cluster.

Start and connect to the Java client.

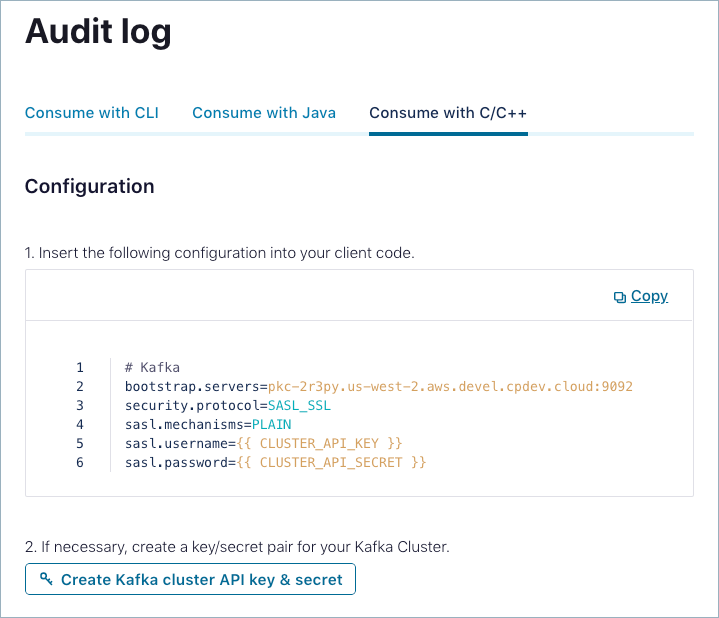

Consume with C/C++

Sign in to Confluent Cloud at https://confluent.cloud.

Navigate to ADMINISTRATION -> Audit log.

On the Audit log page, click the Consume with C/C++ tab.

Copy and paste the provided configuration into your client.

If necessary, click Create Kafka cluster API key & secret to create a

key/secret pair for your Kafka cluster.

Start and connect to the C/C++ client.
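The configuration that the console provides is not reproduced on this page. As a rough sketch of what a SASL-authenticated consumer configuration generally contains, the Python function below builds a dictionary using librdkafka-style property names. The function name, the default group ID, and the `earliest` offset reset are illustrative assumptions; the PLAIN mechanism over SASL_SSL matches the `SASL_SSL/PLAIN` value in the authentication example earlier. Treat the configuration copied from the Audit log page as authoritative.

```python
# Topic name as stated earlier on this page.
AUDIT_LOG_TOPIC = "confluent-audit-log-events"


def audit_log_consumer_config(
    bootstrap_servers: str,
    api_key: str,
    api_secret: str,
    group_id: str = "audit-log-reader",  # hypothetical consumer group name
) -> dict:
    """Build a consumer configuration for the audit log cluster."""
    return {
        "bootstrap.servers": bootstrap_servers,
        "security.protocol": "SASL_SSL",
        "sasl.mechanisms": "PLAIN",
        # The API key and secret created for the audit log cluster.
        "sasl.username": api_key,
        "sasl.password": api_secret,
        "group.id": group_id,
        # Assumption: start from the oldest retained event on first run.
        "auto.offset.reset": "earliest",
    }
```

A dictionary like this can be passed to a librdkafka-based client, such as the `Consumer` class in the `confluent-kafka` Python package, which is then subscribed to `AUDIT_LOG_TOPIC`.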